Gemini's Context vs. Claude's Code: Why AI Benchmarks Don't Tell the Whole Story

Don't just look at benchmarks. We compare Claude's code quality against Gemini 2.5 Pro's massive context window to help you choose the right AI for the job.

The world of software development is in the midst of a tectonic shift, driven by a highly competitive “arms race” among a handful of technology giants to produce the most capable foundation models for coding. This intense rivalry, far from being mere corporate spectacle, is the engine powering staggering improvements in performance, functionality, and cost-effectiveness. For developers and engineering leaders, this isn't just an interesting trend to watch from the sidelines; it's a new reality to navigate.

In 2025, the primary arena of competition is shaping up between Anthropic's Claude series and Google’s Gemini family. Each model family brings a unique set of strengths to the table, offering distinct advantages depending on the task at hand. Industry-standard benchmarks give us a snapshot of the current state of play, but they don't tell the whole story.

The Tale of the Tape: Benchmarks and Bragging Rights

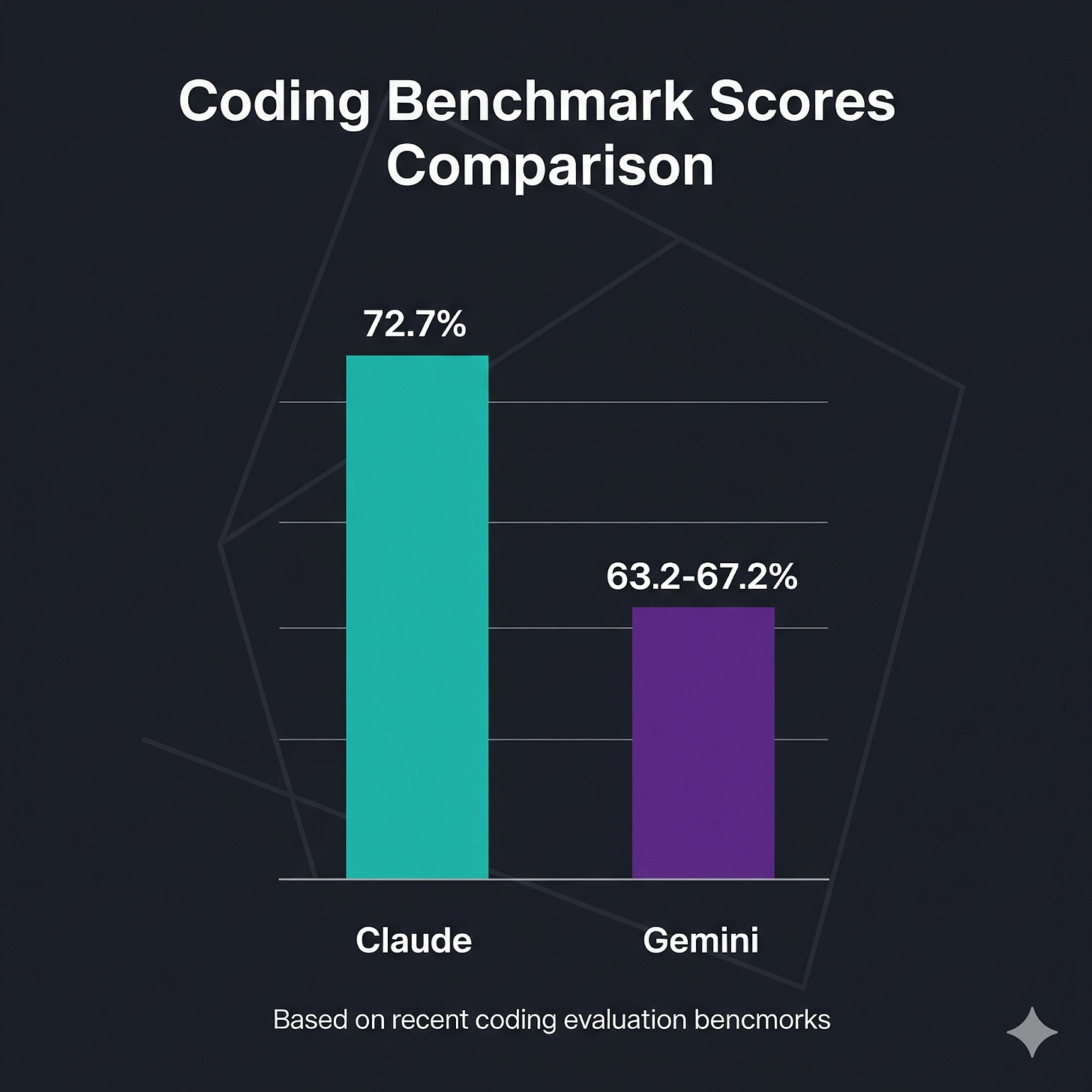

To get a handle on raw coding proficiency, the industry often turns to benchmarks like SWE-bench, a rigorous test that measures a model's ability to resolve real-world GitHub issues. On its human-validated SWE-bench Verified subset, Anthropic's latest models have established a notable lead: Claude Sonnet 4 resolves 72.7% of issues, with the flagship Claude Opus 4 right behind it at 72.5%.

Google’s Gemini 2.5 Pro, by comparison, scores between 63.2% and 67.2% on the same benchmark, depending on the model version. That is a formidable result that places it firmly in the top tier, but it currently makes Gemini a strong second-place contender in raw bug-fixing and code generation tasks.

But here’s where it gets interesting: if we stop at the benchmarks, we miss the bigger picture. Qualitative factors and architectural differences reveal a more nuanced trade-off than any single score can capture.

Beyond the Numbers: Context Windows and Design Taste

The true art of leveraging these powerful tools lies in understanding their unique characteristics. This is where we see the two model families diverge in philosophy and capability.

Gemini 2.5 Pro’s most significant advantage is its exceptionally large context window of 1 million tokens. This is a game-changer for enterprise-level development: the model can often take in a large, sprawling codebase whole and grasp its full architectural context without complex chunking or retrieval-augmented generation (RAG) workarounds. Imagine feeding an entire legacy system to an AI and asking it to identify dependencies or refactor a core service; that's the power Gemini brings to the table. Gemini is also generally faster, which makes it a strong choice for interactive workflows, rapid debugging cycles, and pair programming sessions where speed matters. It also excels at generating user interfaces from visual prompts, translating a sketch or wireframe directly into code.
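Whether a codebase can skip chunking comes down to simple arithmetic: estimated tokens for the code, plus room for instructions and the answer, must fit inside the model's context window. The sketch below assumes roughly 4 characters per token (real tokenizers vary by model and language) and a hypothetical 50,000-token reserve; the helper names and file extensions are illustrative, not part of any vendor SDK.

```python
from pathlib import Path

# Assumption: ~4 characters per token. Real tokenizers differ by model,
# so treat results as a back-of-envelope estimate, not a measurement.
CHARS_PER_TOKEN = 4

# Hypothetical set of source extensions to count; adjust for your stack.
SOURCE_EXTENSIONS = {".py", ".js", ".ts", ".java", ".go", ".rb", ".sql"}


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Rough token estimate from character count, rounded up."""
    return -(-len(text) // chars_per_token)


def estimate_repo_tokens(root: str) -> int:
    """Sum estimated tokens across source files under `root`."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in SOURCE_EXTENSIONS:
            total += estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
    return total


def fits_in_context(total_tokens: int, context_window: int, reserve: int = 50_000) -> bool:
    """True if the code plus a reserved budget for prompt and output fits the window."""
    return total_tokens + reserve <= context_window
```

In use, you would call `fits_in_context(estimate_repo_tokens("path/to/repo"), limit)` with the context limit documented for the model you target; a `False` result is the point where chunking or RAG becomes necessary.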

On the other hand, Anthropic's Claude models, while slower, are frequently praised for something more subjective: genuine design taste. Developers often report that Claude generates more complete, production-ready code that feels architecturally sound and thoughtfully designed. It excels at understanding complex instructions and maintaining context within a given task, making it incredibly reliable for debugging intricate issues. The trade-off? This tendency towards robust solutions can sometimes lead to "over-engineering," where the model produces a more complex or abstract solution than is strictly necessary for a simple problem.
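The trade-offs described above can be encoded as a simple routing rule: send very large or speed-sensitive tasks to Gemini and complex, production-oriented work to Claude. This is a minimal sketch under stated assumptions, not Codalio's implementation or any vendor's API; the `Task` fields, the `Model` labels, and the 200,000-token cut-off are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Model(Enum):
    # Labels only; these are not real API model identifiers.
    GEMINI = "gemini"
    CLAUDE = "claude"


@dataclass
class Task:
    description: str
    context_tokens: int               # estimated size of the code the model must read
    interactive: bool = False         # e.g. live debugging or pair programming
    from_visual_mockup: bool = False  # UI generation from a sketch or wireframe


# Hypothetical cut-off: above this, prefer the model with the larger context window.
LARGE_CONTEXT_THRESHOLD = 200_000


def route(task: Task) -> Model:
    """Pick a model family using the trade-offs discussed in this article."""
    if task.context_tokens > LARGE_CONTEXT_THRESHOLD:
        return Model.GEMINI  # whole-codebase analysis without chunking
    if task.interactive or task.from_visual_mockup:
        return Model.GEMINI  # speed-sensitive loops, UI from visual prompts
    return Model.CLAUDE      # complex instructions, production-oriented code
```

The order of the checks is a design choice: context size wins first because a task that does not fit cannot be handled well at all, while speed and code style are preferences. A real orchestrator would likely weigh more signals, such as cost and past results.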

The Codalio Philosophy: Harnessing the Race

So, who wins? The answer is: it depends on the job to be done. The real challenge isn't picking an ultimate "winner," but building a development process that can intelligently leverage the best tool for each specific task.

This is the core of our philosophy at Codalio. We believe that the next frontier of software development isn't just about more powerful AI; it's about creating a structured, context-aware environment where AI can thrive. Our platform is designed to act as a sophisticated orchestration layer, providing the necessary guardrails and maintaining deep project context.

Maintaining Context: By understanding your user stories, data models, and architectural choices, Codalio ensures that whichever underlying model is used, its output is always relevant and aligned with your project's unique requirements. This mitigates the risk of generic, out-of-context code.

Generating Usable Code: We don't just pass a prompt to an API. We integrate the AI into a complete Software Development Lifecycle (SDLC), leveraging established processes and our open-source Rhino foundation to ensure the generated code is not just correct, but production-ready, maintainable, and scalable.

Providing Guardrails: We use a combination of automated checks, linters, and human-in-the-loop oversight to guide the AI. This allows us to harness the raw power of models like Claude and Gemini while ensuring the final output is accurate, reliable, and secure.
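The automated part of such a guardrail loop can be sketched as a chain of checks that every piece of generated code must pass before anyone relies on it; anything that fails goes back to the model or to a human reviewer. This sketch assumes the generated code is Python and uses the standard-library `ast` module; the two checks, including the hypothetical "no `eval`/`exec`" lint rule, are illustrative rather than Codalio's actual rule set.

```python
import ast
from typing import Callable, List, Optional

# A check returns an error message, or None if the code passes.
Check = Callable[[str], Optional[str]]


def syntax_check(code: str) -> Optional[str]:
    """Reject code that is not valid Python."""
    try:
        ast.parse(code)
    except SyntaxError as exc:
        return f"syntax error on line {exc.lineno}: {exc.msg}"
    return None


def no_eval_check(code: str) -> Optional[str]:
    """Hypothetical lint-style rule: flag calls to eval() or exec()."""
    for node in ast.walk(ast.parse(code)):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Name)
                and node.func.id in {"eval", "exec"}):
            return f"disallowed call to {node.func.id}() on line {node.lineno}"
    return None


def first_problem(code: str, checks: List[Check]) -> Optional[str]:
    """Run checks in order and return the first failure, or None if all pass.

    Stops early because later checks assume the code already parses.
    """
    for check in checks:
        problem = check(code)
        if problem is not None:
            return problem
    return None


DEFAULT_CHECKS: List[Check] = [syntax_check, no_eval_check]

if __name__ == "__main__":
    candidate = "def add(a, b):\n    return a + b\n"
    problem = first_problem(candidate, DEFAULT_CHECKS)
    print("accepted" if problem is None else f"rejected: {problem}")  # prints "accepted"
```

Ordering matters here: the syntax check runs first so that later rules can safely parse the code, and new rules (a linter, a test run) can be appended to the list without changing the loop.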

The AI coding arms race will only continue to accelerate. New models will break old benchmarks, and new capabilities will unlock previously unimaginable workflows. Instead of getting caught in the crossfire, the winning strategy is to adopt a platform that can navigate this dynamic landscape for you, a platform that understands your goals and can deploy the right AI for the right task, every time.

Ready to move beyond the hype and build better software, faster? Explore how Codalio is building the future of AI-powered development.

We’re Codalio 🚀

Our mission is simple: help non-technical founders turn ideas into scalable MVPs, just by typing them in.

Along the way, Codalio helps you craft:

Your Elevator Pitch

Website Structure

Data Model

User Personas

User Stories

Product Roadmap

Market Sizing Analysis

All in one place. No code, no overwhelm.

👉 Sign up free today — no credit card required.
